Hello everyone

With SQLBits, the Easter holidays and a bunch of other stuff, I haven’t posted in a while, but I’m back!

Full disclosure: this is a sponsored post, but it’s for a good cause, as you’ll see in a minute.

If you follow me on Twitter you’ll know I like swag almost as much as Johnny Winter, and man, there was a LOT of swag at SQLBits!

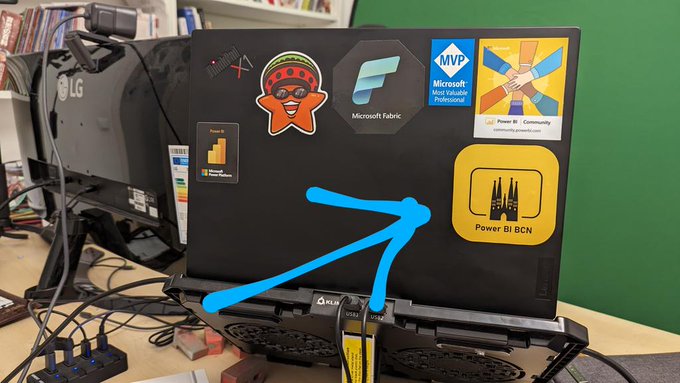

And among all the swag, there is one type that every sponsor and plenty of attendees are into: stickers! Stickers are all over the place. Many fellow attendees also had carefully curated collections of stickers on their laptops.

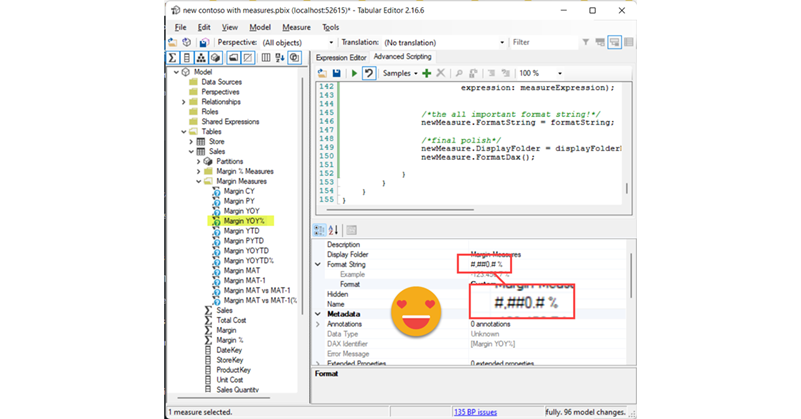

I have around 8 stickers on mine so far, and I’m very proud of each of them. The latest member is a sticker from Tabular Editor 3! But I have to confess that the one I’m most proud of is the one from PUG Barcelona 😀

At PUG Barcelona we have a lively WhatsApp group (285 members as of today) that keeps the group active every day, not just at events. We share doubts and resources, and we congratulate each other on new certifications or even new MVPs of the group (Mar Lizana just became the 6th current MVP of the group, along with Alex Ayala, Diana Aguilera, Iván Arribas, Ricardo Rincón and myself!)

Coming back to the stickers… I have shared the super-short run of stickers I ordered at Stickermule with fellow organizers, and I want to share similar stickers with those who come to speak at PUG Barcelona and, ultimately, with ALL members of PUG Barcelona.

All these thoughts, though, go along with another thought… How am I going to pay for them? The super-short run is one thing, but like my wallet, it does not scale. So when Stickermule offered a nice amount of credit for a blog post with a link, I thought it was an offer too good to pass up. (Besides the link, I was completely free to write whatever I wanted in the blog post.)

I ordered three-inch stickers and they look awesome, but they are a bit big for today’s laptops, so I think I’ll use the credit to create a bunch of two-inch stickers and give them out at PUG Barcelona events.

If you want to speak at PUG Barcelona (either in-person or online) let me know! We’ll try to make it work, and if we do, you’ll get a sticker! Think about it!

And if you’d rather create your own stickers, follow this beautiful link!

–>> custom stickers <<–

Be good and take care!