Hello there! Yes, this is a third part of the snapshot report series, and I’m not even sure it will be the last one. The thing is that since the last post there have been some major improvements to the setup that I think are worth sharing. In my previous post I ended up with a small defeat: for some combinations of filters, drilling through to see all the historic records of those order IDs returned no rows. Also, my setup relied on duplicating the fact table, which is a big no-no in most use cases and a shameful solution from a modeler’s perspective. Even though this was the best I had, I decided to present the topic at two events just a few days apart: Data Community Day Austria 2023 and the @PowerBIEspanol Virtual Conf 2023 (Fin Tour Power BI Days). Having to present the solution to a lot of people kept me thinking and looking for alternatives, and with the help of the always reliable Ricardo Rincón I finally found a working solution that only duplicated dimension tables, creating dimension tables for everything (even comments and the like). That was much better, but not quite scalable. In real life things are ugly and tables have many columns. So, while fighting with the same use case at work, I found a sneakier and much better solution that got rid of all those superfluous dimension tables, reducing them to just 2. While preparing the presentations I also worked a bit on the report layer, and I’ll share some techniques I came up with that may come in handy at some point. But enough talking. Let’s do what? Let’s get to it.

Well, I was happy with the result, but I was even happier with the reaction on Twitter. The dynamic header effect felt like magic to many people, and, well, I have many more followers now. Thank you all.

In case you haven’t seen it, here’s the video:

So how did I do it? Short answer: field parameters. A slightly longer answer: measures and a calculation group create more measures, which are then put into a field parameter with some customization.

Even longer answer? Let’s do it.

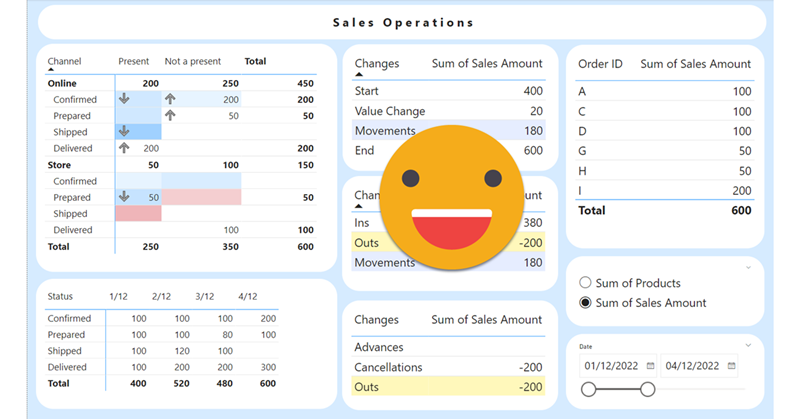

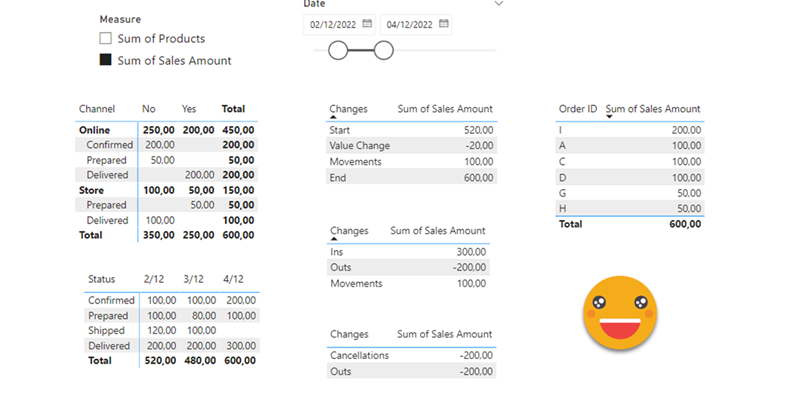

Hello again. You didn’t think there would be a second part, right? Well, me neither. But as things turned out, I had to work on another report dealing with snapshots, and this time they wanted something fancier. It took me a while to figure it out, but I like the result, so I thought it would be nice to share. In the first part, we just showed what went up and what went down, with the ability to go back and check any snapshot. However, in many use cases that does not tell the whole story. To explain why something went up or down, you need to show what went in, what went out (sometimes it’s important to tell which way it went), and maybe even whether the value changed between snapshots. Comparing 2 consecutive snapshots is not that hard, but things get trickier when we take longer time spans and want to account for everything that happened in between.

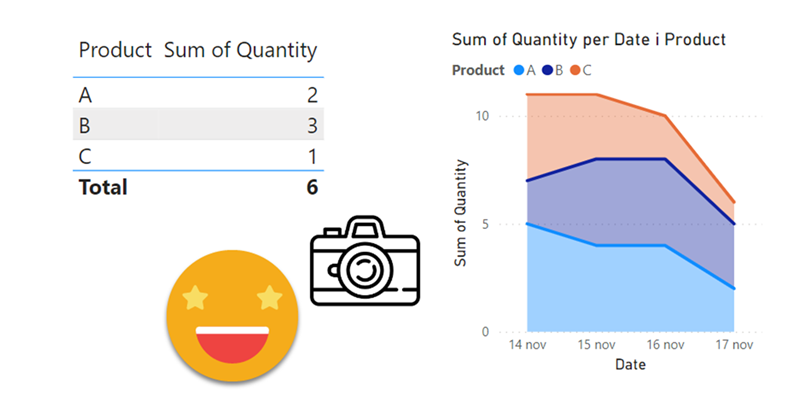

Lately I’ve been building a report, which had not happened for a while, and I enjoyed the process. The final result is also simple yet effective, so I thought I would share the approach here because, yes, there is a calc group in place that does some of the magic.

This approach is valid when you want to follow the status of something: warehouse inventory, accounts pending reconciliation, parking occupancy. In all these situations, you are likely to be more interested in the latest snapshot than in the previous ones, but at the same time you might be interested in the trends that led to the present picture, and maybe even want to go back to a previous snapshot to have a look, without too many clicks. As you can see, we’ll need to be smart about the date filter. For some elements we want only the last snapshot, for others all of them, and yet we want the charts to interact.

Well, hello again. If you just got started with Tabular Editor scripts and programming in general, today’s topic might be a bit too much, but if you are either familiar with other programming languages or already know your way around C# scripts, then this will certainly become a valuable tool: in-script classes! I know it sounds scary and not like something you need, but if you want to write awesome code and make your coding life better in general, then you need to pay attention: in-script classes for Tabular Editor C# scripts are a thing.

Well, well, here is another article about C# scripts, a topic that deserves much more attention than it’s getting. If you haven’t read my previous post, go do it right now, because otherwise writing a C# script is a very frustrating experience. Not as much as building a Data Factory pipeline as a newbie, but almost.

If you have already programmed in other languages, much of this will be obvious, but it’s still good to see how it applies to C# scripting for Tabular Editor. In this article we’ll talk about checking what is selected, checking what is in the model, interacting with the user, and avoiding some of these interactions. Let’s get started!

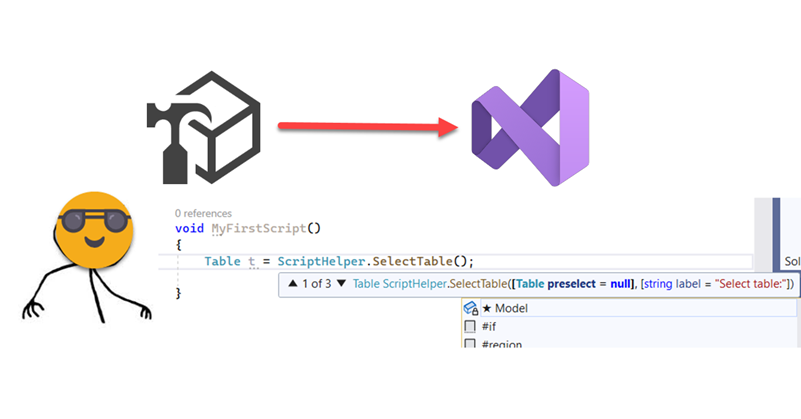

A few months back I wrote an article about what I thought would change the way I (and, I thought, many others) would write C# scripts from then on. In that article I explored the possibility of creating a custom DLL with all the classes and methods that would make my scripts shorter, more robust and more sophisticated all at once. The idea was cool, but not quite convenient for either development or distribution. However, something good came out of it: my colleague showed me that it was possible to have really good IntelliSense for Tabular Editor C# scripts inside Visual Studio! So even if you are not thinking about custom classes, you should definitely be thinking of Visual Studio as your home for actual coding. Today I want to talk only about how to set it up so that it will not let you write anything that Tabular Editor 2 will not accept.

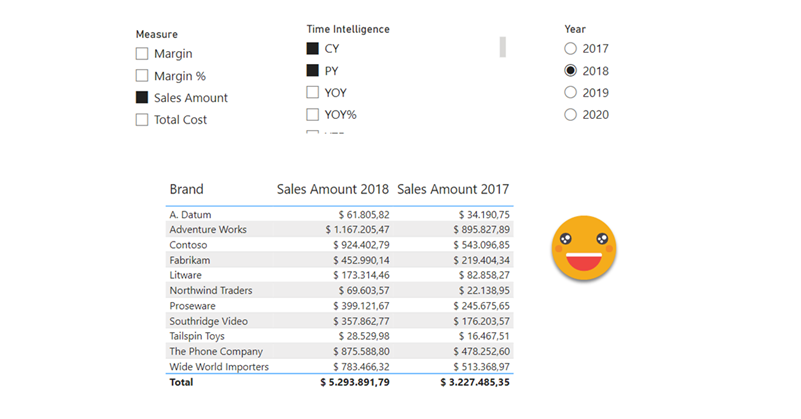

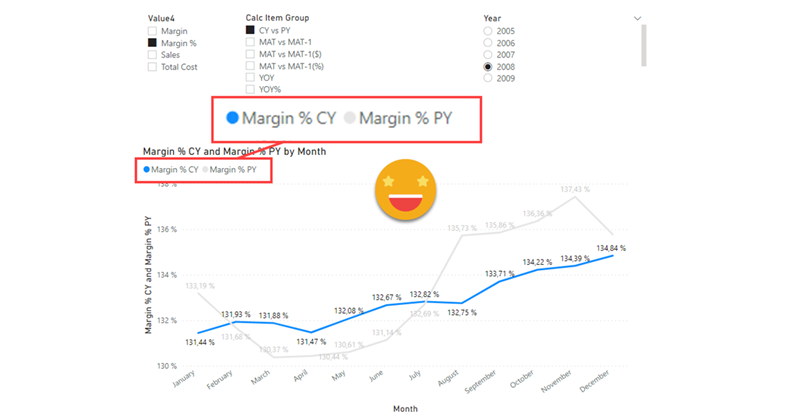

Well, it’s no secret that I like calc groups. But it’s also true that calc groups have an Achilles’ heel: calc item names are constant, so in charts the legends stay the same no matter what. Depending on your use case you might get away with it, but sometimes you might get an end user who will not buy it, and he or she might be an important person, so it’s always good to have a Plan B. Field parameters have brought a breath of fresh air, and they are indeed great for such use cases: rather than modifying the DAX of the measure in the chart, you actually swap the measure, so the name shown in the legend also changes, which is great. However, what happens if you want to create a chart where the user can pick from 4 KPIs and, say, 8 time intelligence calculations? First you’ll have to create the measures, then the field parameter, then figure out how to model it so the user can select the measure and the calculation independently… I mean, it’s not impossible, but it looks long and boring. Let’s see what we can do about it!
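For reference, a field parameter is just a calculated table that Power BI Desktop generates for you, with one row per field. A minimal sketch of what that table looks like, assuming a hypothetical 'Sales' table holding two hypothetical measures, [Sales Amount] and [Sales Amount YTD]:

```dax
-- Field parameter table: each row is (display name, field reference, sort order).
-- 'Sales', [Sales Amount] and [Sales Amount YTD] are hypothetical names.
KPI Selector = {
    ( "Sales Amount", NAMEOF ( 'Sales'[Sales Amount] ), 0 ),
    ( "Sales Amount YTD", NAMEOF ( 'Sales'[Sales Amount YTD] ), 1 )
}
```

With 4 KPIs and 8 time intelligence calculations, that means 32 measures to write and 32 rows in a table like this one, which is exactly the kind of repetitive work worth automating.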

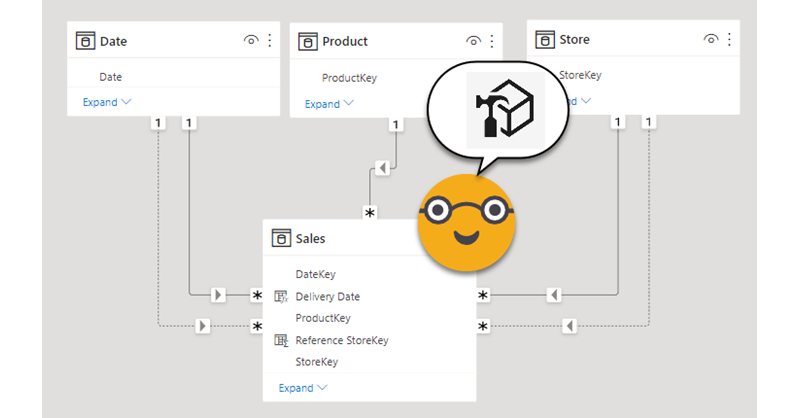

Hi, today I want to talk about inactive relationships: those relationships with the dashed line that do nothing unless you invoke them with USERELATIONSHIP. It’s a common pattern, as regular readers of this blog may know. Let’s say you have a retail model, and for some charts you need to use the order date and in others the delivery date. Other than that, the measures are the same: Sales Amount, Total Cost, Margin, etc. Well, you could make a copy of each measure and wrap it in a CALCULATE( … USERELATIONSHIP(… ) ), or you could build a calculation group. Today we’ll do the latter, with style.
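To make the two options concrete, here is a minimal sketch of each, assuming a hypothetical inactive relationship between 'Sales'[Delivery Date] and 'Date'[Date] and a hypothetical [Sales Amount] measure. This only illustrates the general technique, not necessarily the exact setup of the post:

```dax
-- Option 1: one copy per measure, activating the inactive relationship.
-- 'Sales'[Delivery Date], 'Date'[Date] and [Sales Amount] are hypothetical names.
Sales Amount (Delivery) =
CALCULATE (
    [Sales Amount],
    USERELATIONSHIP ( 'Sales'[Delivery Date], 'Date'[Date] )
)

-- Option 2: expression of a single calculation item (e.g. "Delivery Date")
-- that applies the same relationship to whatever measure is in the visual.
CALCULATE (
    SELECTEDMEASURE (),
    USERELATIONSHIP ( 'Sales'[Delivery Date], 'Date'[Date] )
)
```

The first option needs a new measure for Sales Amount, Total Cost, Margin and so on, while the second is written once and works for all of them.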

So today I received a question on the SML group which looked like the perfect use case for a calculation group. Somebody was trying to build a matrix and wanted to have the values without decimals but the totals with decimals. In other words, the goal was to define different formats for values and totals. Without calculation groups, the only way to get close to that is the FORMAT function, but that’s a dirty trick that will come back to bite you, because now your values are text. If you later use that measure in a SUMX expression, for instance, it will not recognize it as a number anymore. Of course, you could keep one measure for the value and a different one for the formatted value, but, hmmm, then you would have to do that for every measure you might want to use this way and, well, manage that sprawling number of measures. Let’s check both ways, in case someone has external tools blocked or something.
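The calculation group route leaves the value untouched and changes only the format string. A minimal sketch, assuming the matrix has a single hypothetical 'Product'[Category] column on rows (a model with nested row levels would need a more elaborate check):

```dax
-- Calculation item expression: return the measure as-is, so it stays numeric.
SELECTEDMEASURE ()

-- Format string expression of the same calculation item.
-- 'Product'[Category] is a hypothetical row column: when it is in scope
-- we are on a value row (no decimals); otherwise we are on the total.
IF (
    ISINSCOPE ( 'Product'[Category] ),
    "#,##0",
    "#,##0.00"
)
```

Because only the format string changes, the measure still returns a number, so using it later inside SUMX or any other arithmetic keeps working.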